An academic colleague of mine recently tweeted that Netflix’s Okja was worth watching. He is not an agricultural scientist or breeder, but he is a geneticist, and I was surprised that the image of Okja did not trigger his scientific angst. I realize Okja is a science-fiction fantasy movie – but the science makes no sense. The most glaring problem for me is that, for some reason, the movie makers, who had a budget of $50 million, decided to reimagine a female of a litter-bearing mammalian species that normally has two rows of nipples, and give her a random goat udder with two pendulous nipples that appear to be slightly engorged with milk despite the fact that she has never given birth to any offspring. Why??

Okja Credit: Netflix

According to an interview entitled “How the ‘Okja’ VFX Team Created the Creature That Turned Us All Vegetarian” with Okja’s visual effects supervisor, Erik De Boer:

“Since Okja was designed as a GMO, she had to come across as a believable meat producer. So it was very important for us and for Bong that she had a very healthy and luxurious feel to her skin and her mass. You could harvest a lot of pork from her”. Erik De Boer

For those of you that have not seen Okja, the premise of the movie begins with an evil biotechnology company that starts with M…..no not that one…..(get it? wink wink). It is Mirando Corporation, and they have been surreptitiously using genetic engineering to breed a special kind of “super pig”. According to Mirando CEO (Tilda Swinton) the “super pigs will be big and beautiful, leave a minimal footprint on the environment, consume less feed, and produce less excretions, and most importantly they need to taste F#$%#@$ good.”

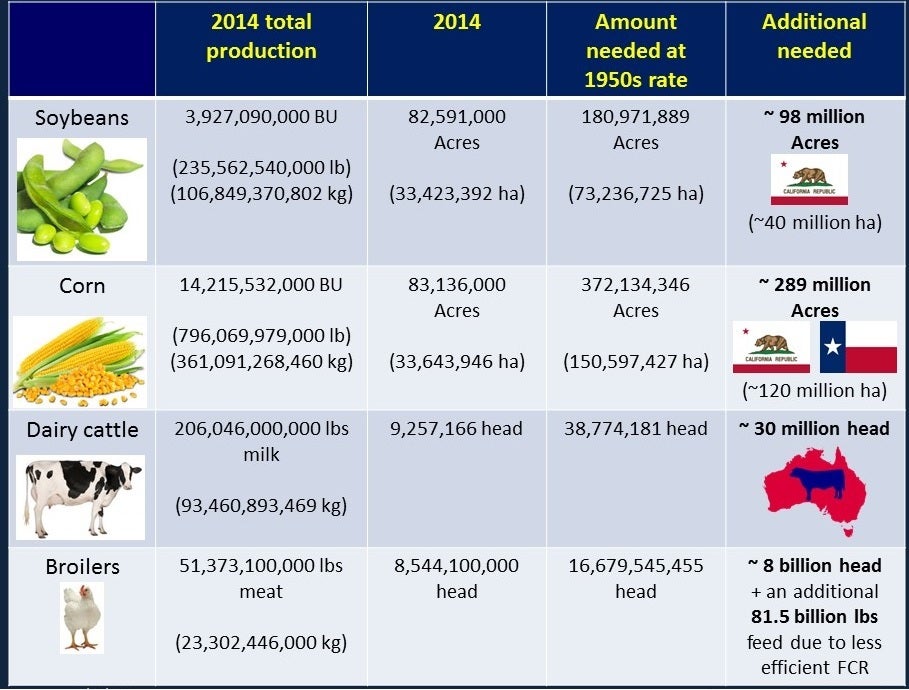

I guess the “F” bomb had to be in there for dramatic effect, because the rest of those goals are a pretty close estimate of the overall breeding objective of probably every animal breeding company in the world. Disease resistance would probably be in there too. And before you write this off as being irrelevant to your life, what would be the implications of having pigs and cows and chickens that leave a maximal footprint on the environment, consume more feed, and produce more excretions? In other words, what would happen if there were no genetic improvement programs for food animal species?

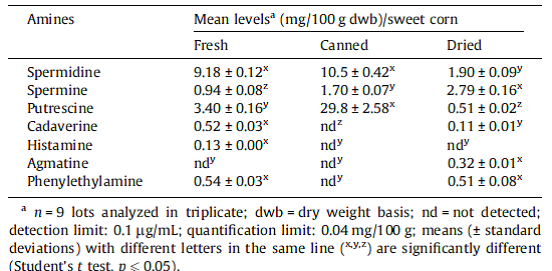

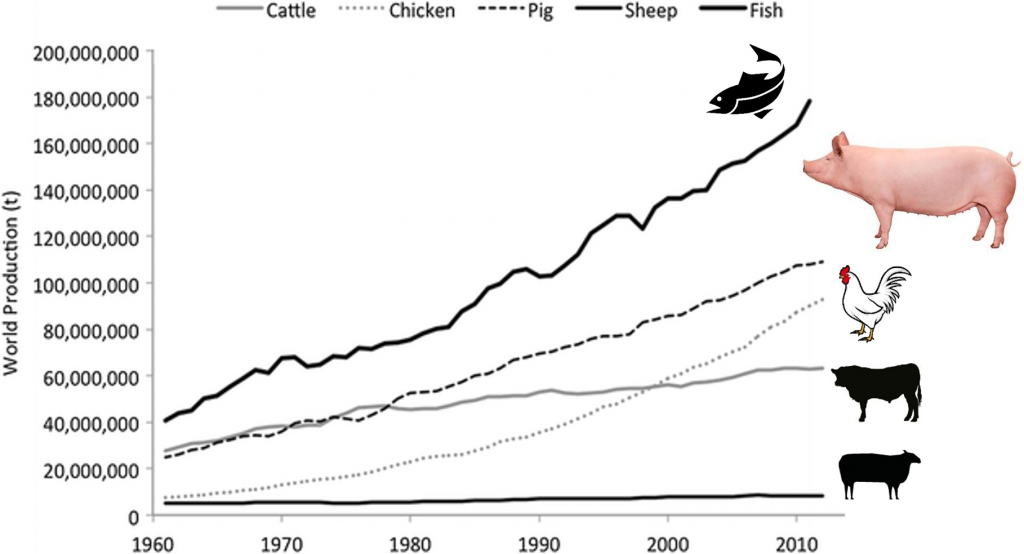

Let’s consider pigs, the second largest provider of meat after fish (wild & cultured).

Over the years, selection goals for pig genetic improvement programs have included:

- Increase litter size

- Increase the number of litters per year

- Increase the amount of lean meat (pork/bacon) per pig

- Decrease the amount of time needed to get to market weight

- Improve the efficiency of feed digestion (feed conversion ratio)

- Decrease the feed needed to produce a finished pig (increase growth rate)

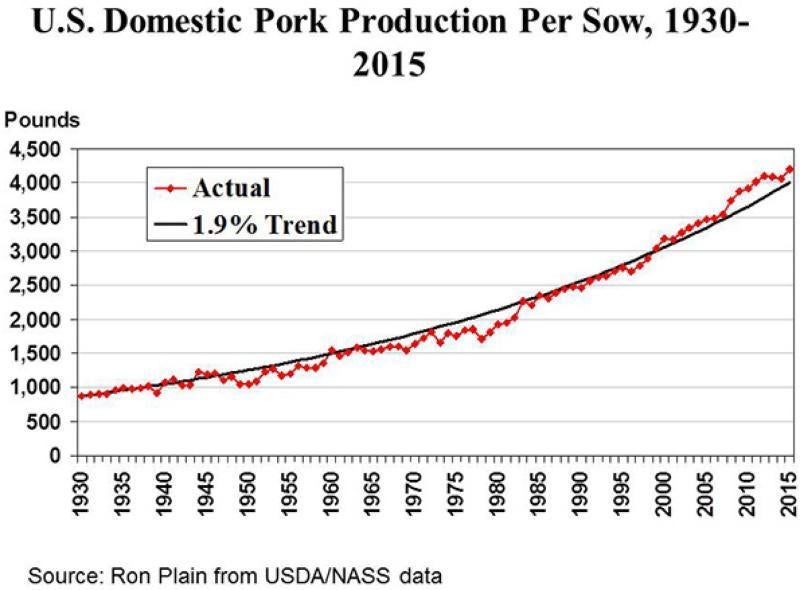

And as a result of those goals, the US industry has improved the pork production per sow more than 5-fold, from 800 lb in 1930 to 4,200 lb in 2015.

Without these productivity improvements over the past 85 years, it would take an additional 25.5 million sows (approximately 31.5 million in total, compared with today’s 6 million) to achieve the current level of US pork production. Now you might not eat pork for personal or religious reasons, or even any animal protein at all – but as long as some people on earth do, there is a strong environmental argument to be made for genetically improving the plant and animal species we use for food production. And the way to achieve this is not 6-ton females with goat udders.
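For anyone who wants to check the sow arithmetic, here is a minimal sketch. It uses only the figures quoted above and makes one simplifying assumption of mine: that today's total pork output is held fixed and only the per-sow yield is rolled back to its 1930 level. The function and variable names are just for illustration.

```python
# Figures quoted in the text (US pork industry).
PORK_PER_SOW_1930_LB = 800    # lb of pork per sow per year in 1930
PORK_PER_SOW_2015_LB = 4_200  # lb of pork per sow per year in 2015
CURRENT_SOWS_MILLION = 6.0    # today's US sow herd, in millions


def sows_needed(current_sows: float, old_yield: float, new_yield: float) -> float:
    """Sows required to match today's output at the old per-sow yield.

    Assumption: total output stays the same; only yield per sow changes.
    """
    total_output = current_sows * new_yield
    return total_output / old_yield


fold_improvement = PORK_PER_SOW_2015_LB / PORK_PER_SOW_1930_LB
total_sows_million = sows_needed(
    CURRENT_SOWS_MILLION, PORK_PER_SOW_1930_LB, PORK_PER_SOW_2015_LB
)
additional_sows_million = total_sows_million - CURRENT_SOWS_MILLION

print(f"Improvement per sow: {fold_improvement:.2f}-fold")
print(f"Sows needed at 1930 productivity: {total_sows_million:.1f} million")
print(f"Additional sows required: {additional_sows_million:.1f} million")
```

The 5.25-fold improvement applied to 6 million sows gives the 31.5 million total, and subtracting today's herd leaves the 25.5 million additional sows.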

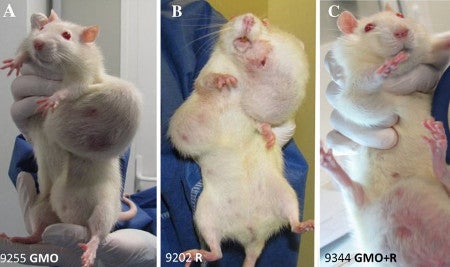

And really this is where Okja loses the plot for me in more ways than one. This so-called super pig that was meant to come across as a believable meat producer took 10 years to reach her 6-ton maturity weight (actual pigs take 5-6 months and weigh around 1/8 ton), and was still barren after a decade. Most sows have more than two litters per year – on average marketing a total of around 22 piglets/sow/year. See the rows of teats on this litter-bearing pig below?

No udder on her – and she has a snout, not a hippopotamus face, and a curly tail. That is what makes a pig. And her feed conversion (feed/gain) ratio is 2.6, down from 3.2 just 25 years ago – making her “consume less feed”, which is a good thing. Would you prefer a Prius or a Hummer in terms of fuel efficiency and the resultant environmental footprint of food production systems?
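To put that feed conversion improvement in more concrete terms, here is a rough sketch. The two ratios come from the text; the 250 lb of weight gain per pig is purely a hypothetical round number I picked for illustration, not an industry figure.

```python
# Feed conversion ratios quoted in the text (lb of feed per lb of gain).
FCR_NOW = 2.6
FCR_25_YEARS_AGO = 3.2

# Hypothetical weight gain per pig, chosen only to illustrate the arithmetic.
HYPOTHETICAL_GAIN_LB = 250.0


def feed_required(gain_lb: float, fcr: float) -> float:
    """Feed (lb) needed to put on `gain_lb` of weight at a given feed/gain ratio."""
    return gain_lb * fcr


feed_then_lb = feed_required(HYPOTHETICAL_GAIN_LB, FCR_25_YEARS_AGO)
feed_now_lb = feed_required(HYPOTHETICAL_GAIN_LB, FCR_NOW)
feed_saved_lb = feed_then_lb - feed_now_lb

# The relative saving depends only on the two ratios, not on the assumed gain.
relative_saving = (FCR_25_YEARS_AGO - FCR_NOW) / FCR_25_YEARS_AGO

print(f"Feed per pig then: {feed_then_lb:.0f} lb, now: {feed_now_lb:.0f} lb")
print(f"Feed saved per pig: {feed_saved_lb:.0f} lb ({relative_saving:.1%} less)")
```

Whatever gain you plug in, going from 3.2 to 2.6 works out to a little under one fifth less feed for the same amount of pork.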

According to Okja’s writer and director, Bong Joon-ho, in an interview with Vulture:

“I don’t think of Okja as a metaphor. It doesn’t have any symbolism of any kind. I simply want to make the audience think that this animal is something that could happen in the very near future, like five years from now. In reality, there are such animals being developed. They’re developing a genetically modified pig.” Bong Joon-ho

Yes, “they” are developing a genetically modified pig. Well, actually, public sector scientists at the University of Guelph developed the so-called Enviropig more than a decade ago, a pig that had a 75% reduction in undigested phosphorus in its manure. It literally was a pig that “produced less excretions” to avoid phosphorus pollution. But public opposition to genetic engineering, fueled by activist fearmongering and dare I say the long-lasting impacts of science-fiction movies like Jurassic Park, has effectively kept such pigs from commercialization. We have over a billion pigs being reared globally, and they are still unable to digest phytate, and so the inorganic phosphorus pollution problem in their manure still exists. Precluding access to the Enviropig did not make the pig poop phosphorus pollution problem go away; it just precluded one potential solution.

Recently, public sector researchers at the University of Missouri and the Roslin Institute in Scotland have produced gene-edited pigs that are immune to the Porcine Reproductive and Respiratory Syndrome (PRRS) virus, a devastating disease of pigs. These groups inactivated a protein that was known to be the gateway for this virus to infect pigs.

University of Missouri research team that developed disease-resistant gene edited pigs

Shockingly the public sector researchers that developed these pigs, academic colleagues of mine, look and behave nothing like the deranged zoologist and TV personality Johnny Wilcox in Okja, played by Jake Gyllenhaal, but rather “they” look more like a group of mundane university professors (apologies Kristin, Kevin and Randy) trying to produce disease-resistant pigs to solve an animal disease problem. Sometimes life does not imitate art at all.

In the darkest part of the movie – spoiler alert – Okja is taken by Mirando Corporation to be raped by a super boar for no apparent purpose other than presumably to shock the audience, and then a sample of her flesh is taken by plunging a circular probe into her side. Apparently the non-invasive ultrasound examination that is typically done to evaluate back fat thickness and meat quality was not gruesome enough for the movie makers. And then she, and the 25 other genetically modified super pigs that were developed as a new genetic line, are inexplicably placed in what appears to be a cattle feedyard with 100s of other super pigs (not sure where they all came from as there were only 26 to start with) to be slaughtered for food. Talk about eating your seed corn – that would have been the end of Okja’s genetics as she did not produce a single offspring to carry on her super pig line.

Of course, at the end of the movie – spoiler alert – Okja is spared from becoming ham and bacon, and goes off to live the life of a 6-ton pet pig in Korea. That is how fairy tales, even science-fiction fairy tales, are supposed to end. That is part of the reason that Erik De Boer made Okja pet-like, stating:

“In terms of Okja’s demeanor and personality, Bong and I always discussed it as a very happy, friendly Labrador. I think we can all relate to that slightly older dog that is just happy to tag along, lumbers a bit, with floppy ears, looking up over its brows. That was the personality we wanted to give Okja: just a very content Labrador inside a super-pig body”. Erik De Boer

I can understand that some people want pigs as pets, but the vast majority of them are grown for food production. Pigs are actually a very important source of meat in Asia, especially China. Over one billion pigs are consumed annually worldwide, an average of 23 million pigs a week. China, the European Union and the United States eat about 12 million, 5 million and 2 million pigs per week, respectively. That is the source of all breakfast bacon and holiday hams.
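As a quick sanity check on those consumption numbers, the sketch below annualizes the weekly figures and works out each region's share of the global total. All inputs are the rounded figures from the paragraph above; the 52-week year and the variable names are my own simplifications.

```python
WEEKS_PER_YEAR = 52  # simplification: ignores the extra day or two per year

# Weekly pig consumption quoted in the text, in millions of pigs.
WORLD_PIGS_PER_WEEK_MILLION = 23
REGION_PIGS_PER_WEEK_MILLION = {
    "China": 12,
    "European Union": 5,
    "United States": 2,
}

# Annualized world consumption, in millions of pigs.
annual_world_million = WORLD_PIGS_PER_WEEK_MILLION * WEEKS_PER_YEAR

# Share of weekly world consumption for each listed region.
regional_share = {
    region: pigs / WORLD_PIGS_PER_WEEK_MILLION
    for region, pigs in REGION_PIGS_PER_WEEK_MILLION.items()
}
top_three_million = sum(REGION_PIGS_PER_WEEK_MILLION.values())

print(f"World: about {annual_world_million / 1000:.1f} billion pigs per year")
for region, share in regional_share.items():
    print(f"{region}: {share:.0%} of weekly world consumption")
print(f"These three together: {top_three_million} of "
      f"{WORLD_PIGS_PER_WEEK_MILLION} million pigs per week")
```

Twenty-three million pigs a week comes to roughly 1.2 billion a year, which squares with “over one billion”, and China alone accounts for a bit more than half of it.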

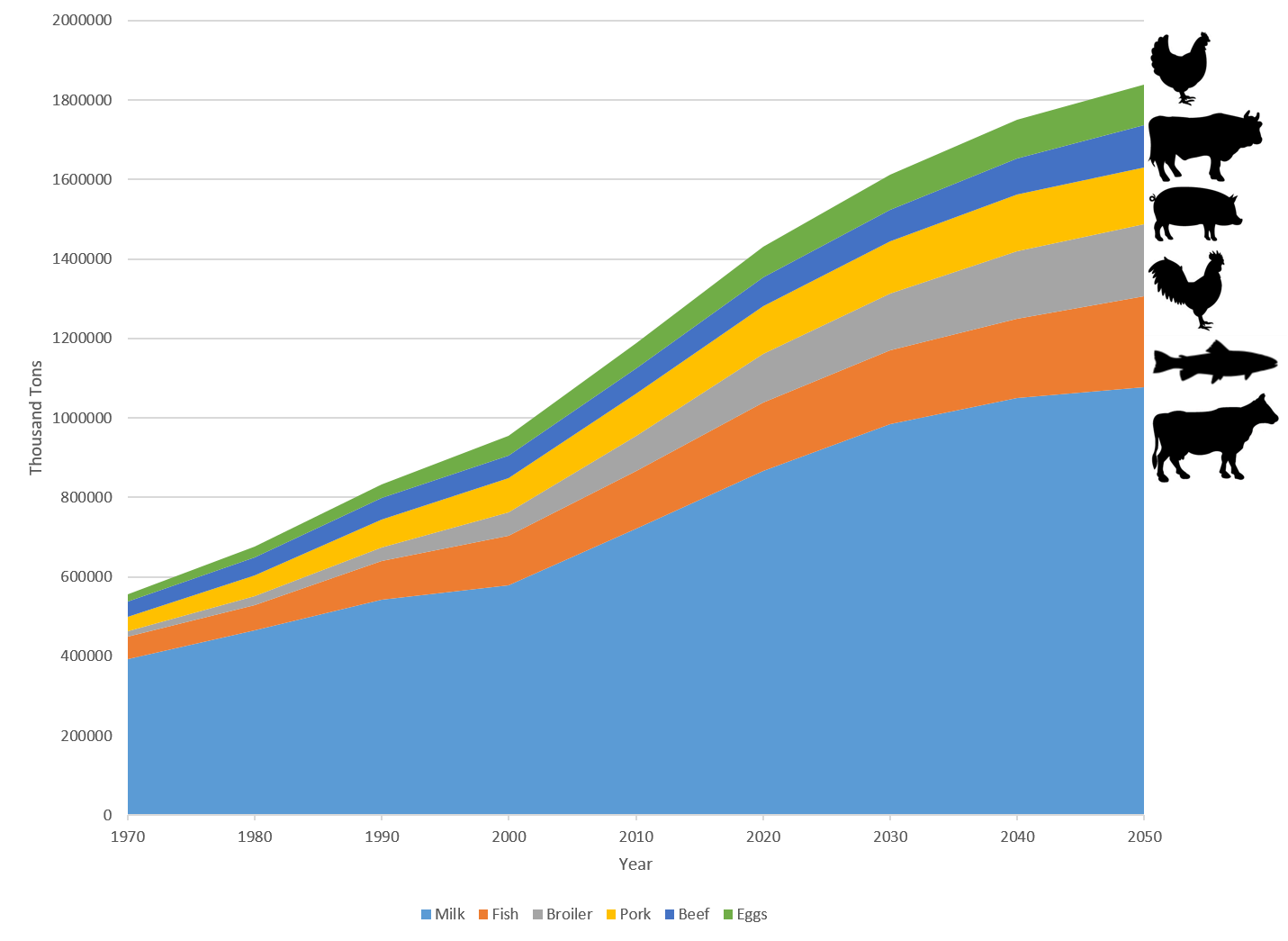

So while it is fun to imagine a pet 6-ton pig, it is also important to be seriously cognizant of the 2050 projections shown in the figure below.

Milk, egg, broiler, beef and pork production since 1970 and projected to 2050 (FAOSTAT 2030 report & database as of 2017).

Ultimately, the livestock sector collectively will need to produce more with less while enhancing health and welfare for humans, animals and the environment. Okja can demonize this objective and promote a pigs-as-pets fairy tale, but livestock play an important role in global food security today, providing essential micronutrients and high-quality protein to billions. They also make important contributions to livelihoods and economic opportunities for many, as well as providing draught power, manure for crop production and many by-products.

I wish science fiction movies and fairytales could do a better job of “sciencing the shit” out of agriculture and food production, in the way that Mark Watney was able to use innovation to get off Mars in The Martian. With no apparent sense of irony, towards the end of the interview regarding the complexities of shooting Okja, visual effects supervisor, Erik De Boer emphasized the importance of pushing technology to try to do better in his chosen field.

“From a technology point of view, we’re always trying to push ourselves further and try to do better.” Erik De Boer

There is probably no more pressing problem facing humanity than climate-smart agriculture to feed projected population growth, and it disappoints me that Okja yet again perpetuated the tired old story of scientists as disturbed, money-hungry corporate sellouts. As long as agricultural research continues to be painted in such a negative light, it will be difficult to obtain public support for agricultural technology and the importance of “sciencing the excretion” out of problems facing agricultural production systems.
